Here’s a little sneak peek at our upcoming release of Testlab with exploratory testing. Sounds familiar, right? Didn’t the tool already have the feature? Yes, it did – kind of. We have re-created it to work better with all the other features that Testlab has today. Read on to find out what we mean by exploratory testing, what it will enable you to do and how Testlab will change.

What is exploratory testing?

As with many things, there are many definitions of exploratory testing. What most of them, if not all, have in common is that the testing is documented while it is being done. How exactly exploratory testing is documented varies – as it does for other forms of testing. Exploratory testing is often strongest when developing completely new features: there, pre-planning the test cases is often inefficient and slow, and the most efficient way to plan the testing is to do it while doing the actual testing. It is also very important that all testing works well together and supports the development.

From Meliora’s point of view as a test tool maker, we just want to make it as easy as possible for testers to concentrate on testing: giving access to relevant information, making documentation of testing easy and keeping the “tool overhead” away. Also, let’s say it straight: exploratory testing is much more fun than executing pre-scripted test cases like a robot, so one important motivation for the upcoming change is to allow testers to do their work in a more intellectually rewarding way.

Testing something – not just anything

With any testing methodology, it is important that the tester has a very clear focus on what is being tested and why. The tester should not just be executing a test case, but trying to find bugs, making the quality known (that certain features are verified to work), finding out about performance or usability, or whatever the goal of the testing is. When the reason for testing is clear, the work is more efficient. Humans can vary what they do on the fly and concentrate on what matters the most at any given moment. Again, testing can be done in countless ways, but very often it is done against some change or some feature: a requirement, a user story, a task, a bug, a change request or something similar. If these things are tracked (and they really should be!), then the testing of these things should be tracked as well. It doesn’t need to be cumbersome. It is just a decision: should this be tested? If yes, test it and, when it’s done, mark it as done. Simple, and it gives you knowledge about which things have been analytically tested.

Cooperation with the development

In a perfect world (which we are all trying to make, right?) testing supports the design and the development of the software. The reason there is often room for improvement here is that the findings of testing are not that easy to utilize. What the right tools can do to mitigate the problem is to link the testing better to what is being done – in real time. This is a boon for testers and developers alike: it is much easier and more rewarding to test against something new and important, and for developers and the whole project it is great to get results fast. This also helps management make the right decisions, because the test results are better aligned with what is being done, as opposed to just an off-putting big list of weakly categorized findings or, even worse, just a pass rate as a number.

Test documentation formatting and re-usability

Documentation is the boring stuff, and doesn’t the boring kill the creativity? Do I really have to do that?

The focus in exploratory testing is on what is being tested and on learning how it works, not on executing traditional “pre-scripted” test cases. In this article we consider a test case in a somewhat broader sense: it is a container for testing. A test case can be a traditional one, or it can be a target of exploratory testing. The reason to split the work from “I explored new features for a day” into “I explored features X, Y and Z” is to make it easier to communicate your work and to return to part of it later.

Yet another thing that varies a lot depending on the kind of testing being done is how test cases are written. There is no one solution that fits all. When testing is done by someone new to the system, more detailed test cases are needed. Another reason for detailed test cases might come from regulations or business criticality: if you want to be 100% sure that a certain path is tested, you need to write it down (or, as another option, automate it). Then again, detailed test cases are so slow to write and maintain that on many occasions it is just not worth it. That time is better spent on something else.

All that said, it is good to consider the intended time span of the testing work. How likely is it that you will continue the work after you are done with your exploratory testing? Will someone else need to read what you learned or tested? If you might need the description of how you tested later, you should take that into account when documenting the testing. The difference in effort when documenting is probably not that big, but it can have a bigger impact when someone later reads what has been done. If you can write your exploratory testing straight into a format that can be reused, you will save a lot of time later.

What comes out of exploratory testing

Exploratory testing can clearly find bugs and generate ideas for enhancements, so one obvious output is issue tickets (as that is a very good way of handling these findings). Where exploratory testing is sometimes more vague is that it might not tell whether some areas should still be tested, how the testing is connected to different changes and so on. On small projects issue tickets alone might be enough, but on larger ones you need to be able to see what was tested before the changes, what was tested after them, what the results were, which areas produce more issues and so on. You can get this with exploratory methods as well: you just need to split the testing into test cases and tell the tool what the target of your testing is. Managers get detailed reports that map to the nuts and bolts of the system without extra work, and testers can easily document what they do while they test.

OK, that is how it works in theory, but what can I actually do with exploratory testing in Testlab?

Just a minute! Before the actual testing, let’s back up to the thing you are testing: the system under test. That’s the reason we are doing the work, isn’t it? When we do exploratory testing, we rarely start from scratch. We have some sort of specification, a task or maybe an issue that we want to test. In Testlab you pick one of these, or maybe a collection of them, and tell Testlab the target of your testing (such as a milestone, version or environment). Testlab then creates an empty test case for you, where you can document what you test while you do it. You can create one or more tests per thing you test, just as you like. You can document your testing how you want: you can create re-usable test cases with preconditions and steps straight away if you like, write what you did as a description in one rich text field, or do anything in between. All changes are saved in the history data, and all test results are saved in a reportable format and linked to the thing you are testing. You are all set for enterprise-grade visibility while concentrating on the essential. For documented testing, there simply isn’t any overhead in this way of working. At the same time, other people working on the project will be able to see how each thing (requirement, task or issue) was tested. All that said, you can still just test (test what you want, write down what you want), but you really might want to utilize the link between testing and project assets. Those are well-spent seconds and minutes!

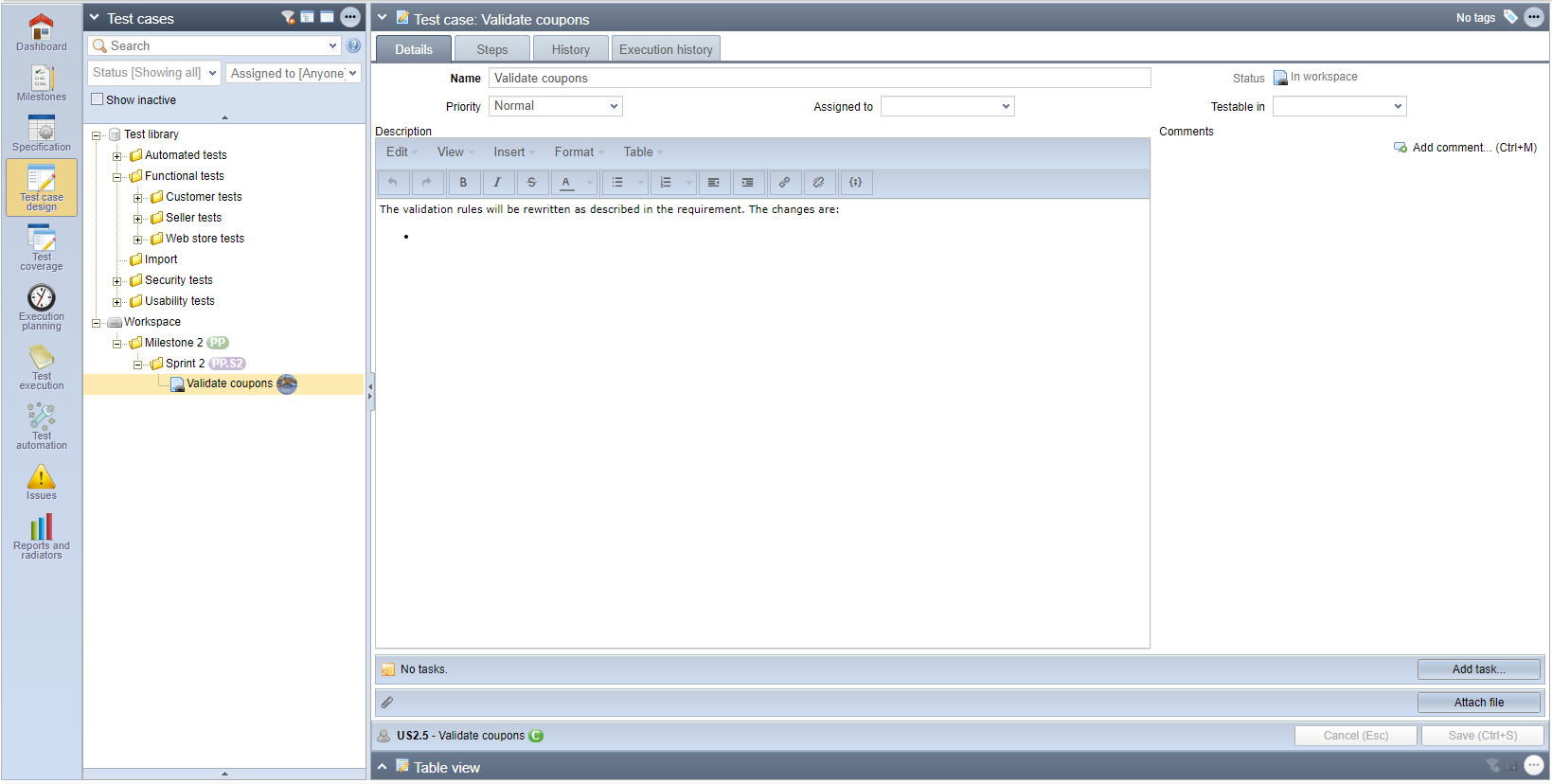

The test cases created this way are stored in a workspace. The workspace is a place where your test cases are stored in a milestone / sprint structure. If you intend your testing to be a one-shot activity, you can leave them there. If you plan to re-use them, it is best to move the test cases to the test case library so they are easier to find.

Upcoming changes to Testlab

While the upcoming change gives users a new approach to testing and new ways of working, the tool will work more or less as before with your existing test cases. Here are the most important changes that we have planned so far:

- Specification: You’ll find a new “Test this” button that you can press to start testing the thing you choose. You just tell the tool the target of the testing (the test run) and off you go!

- Test case design: The current tree node will be renamed to “Test library”, and another root node will be added for workspace tests. The workspace tree will be formed automatically based on your milestones and sprints.

- Execution planning: Execution planning will get new features for picking the things to be tested. When you drag & drop requirements to a test run, you will also be able to choose whether you want to add new exploratory test cases for them or continue exploratory testing on the subject. You can also drag & drop issues and tasks to be tested.

- Test execution: You will have an option for “Explorative mode” where you can edit your test cases while you test. You can also add new things to be tested.

- Issues: As in the specification, a new “Test this” button will be added that can be used to start testing the issue.

- Tasks: You’ll find a “Test this” button here as well.

- Test case revisions: Test case revision handling will be improved. New revisions will not be (automatically) created if the test case has not been run. Also, changes to fields other than the description or test steps will not generate a new revision.
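
The planned revision rule in the last bullet can be read as a small decision function. The sketch below is one interpretation only: it assumes that a change to the description or test steps of an already-run test case does create a revision, and the field names and the function itself are hypothetical, not part of Testlab.

```python
# Assumed names for the fields whose changes count as content changes.
REVISION_FIELDS = {"description", "steps"}


def creates_new_revision(has_been_run: bool, changed_fields: set) -> bool:
    """Return True if an edit should automatically start a new revision."""
    if not has_been_run:
        # A test case that has never been run is edited in place.
        return False
    # Only changes to the description or test steps trigger a revision.
    return bool(REVISION_FIELDS & set(changed_fields))


# Usage: editing steps of an already-run test case starts a new revision,
# while changing some other field (here a made-up "priority") does not.
needs_revision = creates_new_revision(True, {"steps"})
no_revision = creates_new_revision(True, {"priority"})
```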

Besides the changes related to exploratory testing, we’ll fix other things as well. There will be at least one visible change in the upcoming release:

- Three-dot button: In the current version of Testlab there are many buttons visible all the time. To streamline the user interface, the less-used features will be put behind a three-dot menu.

We need to add the disclaimer that all this might change – you know, we want to remain agile. If we get the Best Idea Ever, we might change something.

If you have any questions regarding the changes, please do reach out to us. As always, we highly value what we hear from you, our users, and we do read your suggestions about how our tool could work better for you.

(Pictures by Dall-E, our cool AI artist friend)